AI Productivity Tools Series: Streamlining AI Analysis with Postman Flows and Airtable

In the rapidly evolving world of artificial intelligence, Large Language Models (LLMs) like OpenAI's GPT-4, Anthropic's Claude, and Google's Bard are at the forefront of innovation. My journey with these AI giants involved a unique experiment: using Postman to compare their responses to identical prompts and storing these insights in Airtable. This blog post dives into my methodology, observations, and the intriguing conclusions drawn from this comparison.

Setting the Stage

Postman, a popular tool for API testing, served as my laboratory for this experiment. Its ability to create and monitor HTTP requests makes it an ideal candidate for such tests. Airtable, akin to a spreadsheet with superpowers, was chosen for its adeptness in organizing and visualizing data, a crucial aspect of my research.

Methodology

The first step was assembling a collection of AI APIs, specifically targeting OpenAI GPT-4, Anthropic Claude, and Google Bard. The challenge? Ensuring uniformity in the prompts sent to each LLM. This is where Postman Flows came into play, enabling a seamless process where a single prompt could be dispatched to all three models at once. (If you’re not familiar with Postman Flows, here is a great introductory article and video.)

In the example Flow, a single prompt is sent to all three LLMs. I have also used Ollama to run Mistral or Llama2 locally to get additional LLM responses. All I needed was to create a new Send Request block in Flows and pass in the localhost URL.
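For readers who want to see the same fan-out outside of Flows, here is a minimal Python sketch. It only builds the requests and does not send them. The URLs and body shapes follow the public OpenAI Chat Completions, Anthropic Messages, and Ollama generate endpoints as I understand them; the model names, environment variable names, and the `build_requests` helper are assumptions for illustration, not what the Flow uses internally. Bard is omitted from the sketch; a fourth entry would follow the same pattern.

```python
import json
import os


def build_requests(prompt: str) -> dict:
    """Return one request description per model, all carrying the same prompt."""
    # Chat-style APIs take a list of messages; the prompt goes in as a user turn.
    user_message = [{"role": "user", "content": prompt}]
    return {
        "openai": {
            "url": "https://api.openai.com/v1/chat/completions",
            "headers": {
                # Assumed env var name; any secret store would do.
                "Authorization": f"Bearer {os.environ.get('OPENAI_API_KEY', '')}",
                "Content-Type": "application/json",
            },
            "body": {"model": "gpt-4", "messages": user_message},
        },
        "anthropic": {
            "url": "https://api.anthropic.com/v1/messages",
            "headers": {
                "x-api-key": os.environ.get("ANTHROPIC_API_KEY", ""),
                "anthropic-version": "2023-06-01",
                "Content-Type": "application/json",
            },
            # Model name is an assumption; use whichever Claude model you have access to.
            "body": {"model": "claude-2.1", "max_tokens": 1024, "messages": user_message},
        },
        "ollama": {
            # Default local Ollama address; no API key needed.
            "url": "http://localhost:11434/api/generate",
            "headers": {"Content-Type": "application/json"},
            "body": {"model": "mistral", "prompt": prompt, "stream": False},
        },
    }


if __name__ == "__main__":
    for name, req in build_requests("Explain gRPC in two sentences.").items():
        print(name, req["url"], json.dumps(req["body"]))
```

Because every entry is built from the same `prompt` argument, the uniformity that the Flow guarantees visually is enforced here by construction.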

Integration and Workflow

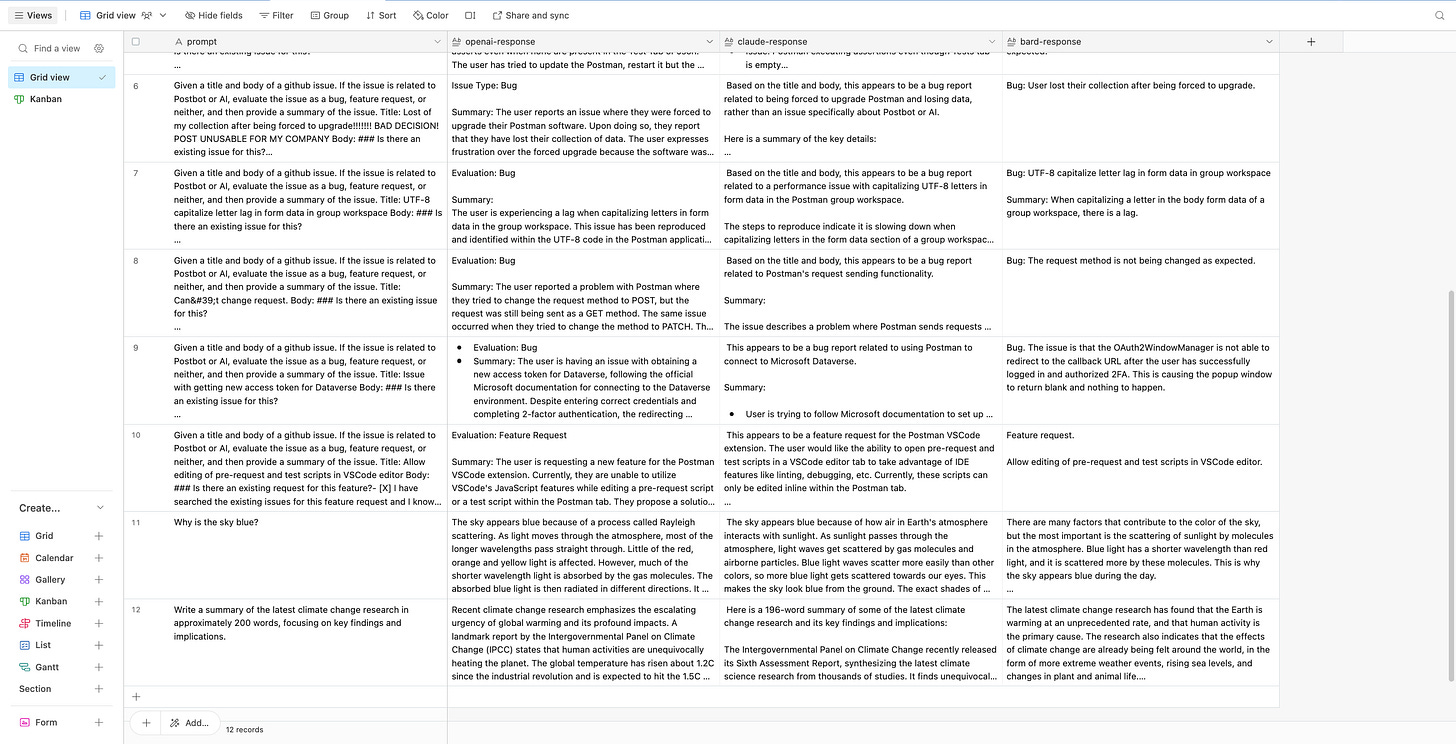

Once the responses were in, the next phase was integration. The Airtable API was instrumental in transferring the prompt and corresponding responses into a structured table. This setup not only streamlined the data collection but also provided a clear, comparative view of each model's response.
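As a rough illustration of that hand-off, the sketch below builds the URL and JSON body for an Airtable "create records" call. The `https://api.airtable.com/v0/{baseId}/{tableName}` route and the `records`/`fields` body shape come from Airtable's public REST API; the column names (`Prompt` plus one column per model) and the `build_airtable_record` helper are hypothetical, so adjust them to match your own table.

```python
from urllib.parse import quote


def build_airtable_record(base_id: str, table_name: str, prompt: str, responses: dict):
    """Build the URL and JSON body for creating one Airtable row.

    `responses` maps a column name (for example a model name) to that
    model's reply. Column names here are assumptions for illustration.
    """
    # Table names may contain spaces, so they are percent-encoded in the path.
    url = f"https://api.airtable.com/v0/{base_id}/{quote(table_name, safe='')}"
    fields = {"Prompt": prompt}
    # One column per model keeps the responses side by side in the grid view.
    for column, text in responses.items():
        fields[column] = text
    return url, {"records": [{"fields": fields}]}
```

The request itself would be a `POST` to that URL with an `Authorization: Bearer <token>` header and the body serialized as JSON, which is what the final Send Request block in a Flow would do.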

I’ve got multiple Flows and Airtables with hundreds of prompt responses, which allows me to stay organized when I’m testing against a single type of workflow.

Observations and Analysis

Interesting patterns emerged during the analysis of various prompts. When it came to response speed, there was no consistent winner; it varied from prompt to prompt. Content-wise, however, each LLM had some distinct tendencies. GPT-4 often delivered the most detailed responses, Bard leaned towards brevity, and Claude struck a balance between tone and content. Yet this wasn't a rule set in stone; the nuances in responses were as varied as the prompts themselves, and my observations are more anecdotal than scientific.
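If you want to put rough numbers on impressions like these, one option is to summarize the stored rows per model. The sketch below assumes each row has been exported as a dict with `model`, `response`, and `seconds` keys; that shape and the `summarize` helper are hypothetical, and word count is only a crude proxy for how detailed a response is.

```python
from statistics import mean


def summarize(rows: list) -> dict:
    """Average word count and latency per model.

    Each row is assumed to look like:
    {"model": str, "response": str, "seconds": float}
    """
    by_model = {}
    for row in rows:
        by_model.setdefault(row["model"], []).append(row)
    return {
        model: {
            # Whitespace-separated tokens as a simple verbosity measure.
            "avg_words": mean(len(r["response"].split()) for r in items),
            "avg_seconds": mean(r["seconds"] for r in items),
        }
        for model, items in by_model.items()
    }
```

With only a handful of prompts the averages will be noisy, so treat the output as a prompt for closer reading rather than a benchmark.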

Practical Applications

These observations proved invaluable, especially in contexts like sifting through GitHub issues for Postman. The goal was to take all the GitHub issues, pass the title and body of each into a prompt, and have the LLMs analyze it. I really only wanted to see the issues that were related to a project I was working on (GraphQL, gRPC, WebSockets, Postbot, etc.) and get a quick summary of what each issue was about. Here, conciseness was key, and Bard often outshone its counterparts. However, for more elaborate explanations, GPT-4's verbosity became an asset. This experiment underscored a crucial insight: different tasks might be better suited to different LLMs.
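To make the issue-triage step concrete, here is a sketch of how a title and body could be folded into a single prompt. The wording of the prompt, the `RELEVANT`/`NOT_RELEVANT` labels, the character limit, and the `build_issue_prompt` helper are all assumptions for illustration rather than the exact prompt used in the Flow.

```python
TOPICS = ("GraphQL", "gRPC", "WebSockets", "Postbot")


def build_issue_prompt(title, body, topics=TOPICS, max_body_chars=4000):
    """Combine a GitHub issue's title and body into one triage prompt."""
    topic_list = ", ".join(topics)
    # Issue bodies can be empty (None) or very long, so normalize and trim.
    trimmed = (body or "").strip()[:max_body_chars]
    return (
        "You are triaging GitHub issues.\n"
        f"Topics of interest: {topic_list}.\n"
        "Reply with RELEVANT or NOT_RELEVANT on the first line, "
        "then a one-sentence summary of the issue.\n\n"
        f"Title: {title}\n"
        f"Body:\n{trimmed}"
    )
```

Asking for a fixed label on the first line makes the responses easy to filter once they land in a table, and the one-sentence summary plays to the more concise models.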

Conclusion

This exploration into the capabilities of leading LLMs via Postman and Airtable highlights the diversity in AI responses and their practical implications. Here’s a thought: perhaps the future of AI utilization lies in a harmonized approach, leveraging the strengths of various models for diverse applications. A phrase I heard throughout my career is that you choose the right tool for the right job. LLMs are no different, and being able to choose the right AI (if any at all) for a given situation will be part of the new AI revolution. For now, the message for AI enthusiasts and professionals alike is clear – experimentation is the key to unlocking the true value of LLMs.